How Can Web Scraping for the Cheapest Grocery Supermarket Maximize Your Savings?

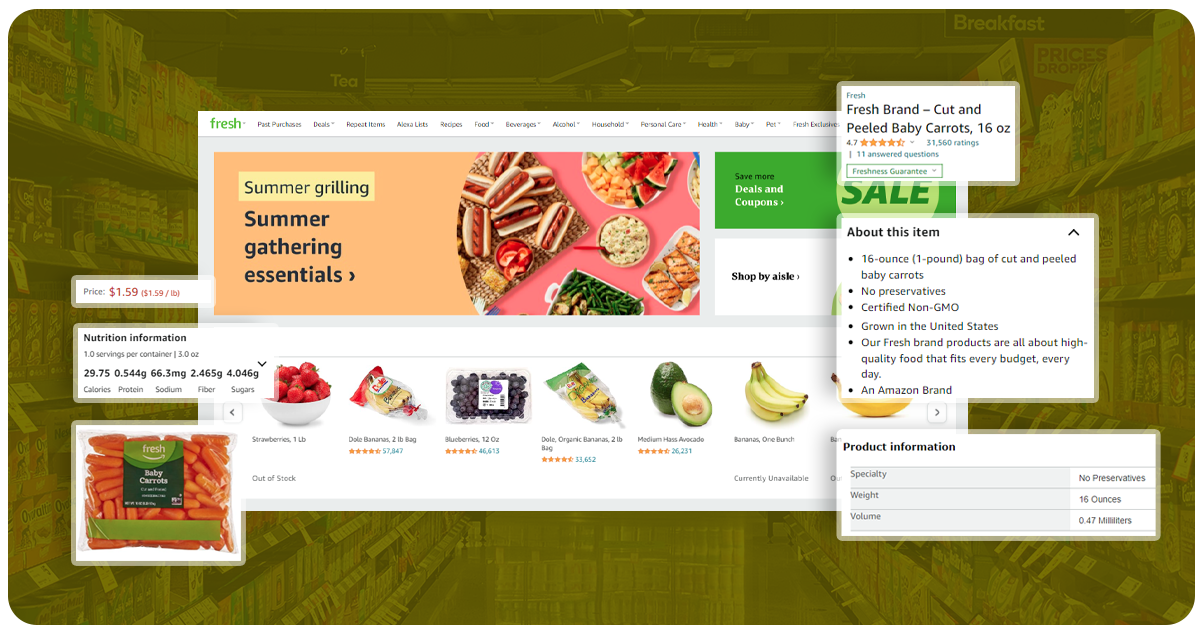

Grocery data web scraping has grown steadily in recent years. As more supermarkets and grocery stores move their inventory online, the demand for scraping tools and techniques has grown with it. Extracting and analyzing data from these online platforms lets consumers compare prices, identify the cheapest supermarkets, and make informed decisions when shopping for groceries.

In this blog, we look at how Scrimper can be used to find the cheapest grocery supermarket.

Scrimper is a software tool designed to help students and other individuals find the most budget-friendly supermarket for their grocery shopping. The program uses web scraping to extract essential information from various online store inventories, which is then organized and stored in a CSV file. By analyzing this data, Scrimper identifies the supermarket with the lowest prices, allowing users to make informed and cost-effective choices for their shopping lists. With Scrimper, finding the best deals and the total price of a shopping list becomes much more straightforward.

As a team of students, we understand the financial challenges of everyday life. Food and grocery expenses make up a significant portion of our variable costs. However, constantly visiting multiple stores to find the best deal on each item, or surviving on bread and water, is inconvenient and unsustainable.

That's why we set out to develop a web scraping tool that solves this problem. Our software browses supermarket websites and identifies the cheapest supermarket for all your grocery needs. By using our tool, you can save time and effort while also saving money on your essential purchases.

Moreover, we have a broader vision for grocery data scraping with Scrimper. We want to extend our assistance to those who may lack the financial means to afford expensive meals. Our software empowers individuals and families to make cost-effective choices and stretch their budgets further, making healthy and affordable meals accessible to everyone.

List of Data Fields

- Product Information

- Categories

- Availability

- Images

- Reviews

- Ratings

- Store Locations

- Promotions

- Discounts

Why Is Scrimper the Best Companion for Scraping Grocery Data to Find the Cheapest Supermarket?

Scrimper is a software tool designed to help individuals, including students, find the most cost-effective supermarket for their grocery shopping. Using web scraping, Scrimper extracts data from various online supermarket inventories to identify the lowest product prices. The tool compiles this information in one place, allowing users to make informed decisions and save money on grocery expenses. Scrimper aims to provide a user-friendly platform that gives people access to affordable options, especially those facing financial constraints. With its focus on optimizing the shopping experience, Scrimper is a valuable resource for anyone looking to make economical choices when purchasing groceries.

With our web scraping tool, we aspire to foster a community that supports one another, positively impacting people's lives by making groceries more affordable and helping individuals lead a better quality of life. We can make a difference and contribute to a more financially inclusive and supportive society.

Our initial concept involves creating a user-friendly platform where users can add individual grocery items to their shopping lists. For data collection, we plan to perform manual web scraping of grocery data from various online sources.

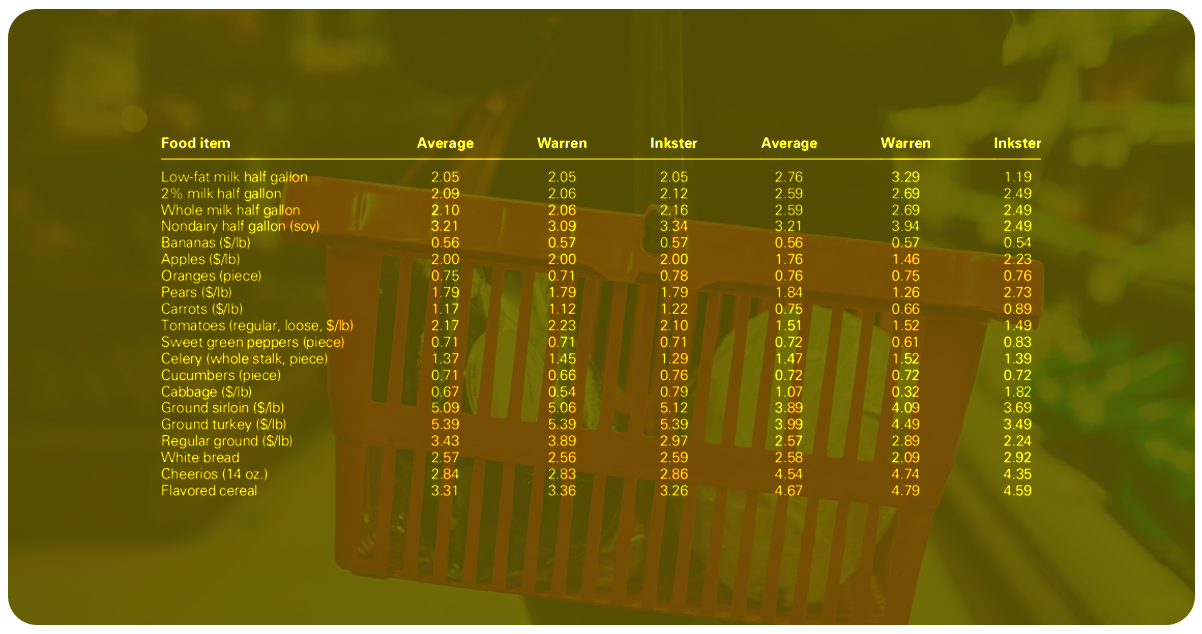

The scraping process will search for each product's lowest price at supermarkets near the user's vicinity. The extracted data will then be organized and recorded in a Pandas DataFrame, featuring columns such as "product," "price," "supermarket," and "distance."

Next, the system will evaluate the "price" and "distance" columns to determine the best trade-off between these criteria. If the user enters a complete grocery list, the scraper will fetch the lowest price for each product at every supermarket and store the total prices, along with the respective supermarket names and distances, in another Pandas DataFrame.
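The planned table and the price/distance trade-off described above can be sketched with Pandas roughly as follows. This is only an illustration under our own assumptions: the sample prices, distances, and store names are made up, and `distance_weight` is a hypothetical parameter for how many euros a user would trade for one kilometre of travel.

```python
import pandas as pd

# Made-up sample rows using the four columns described above.
offers = pd.DataFrame(
    [
        {"product": "milk", "price": 0.99, "supermarket": "Store A", "distance": 1.2},
        {"product": "milk", "price": 1.09, "supermarket": "Store B", "distance": 0.4},
        {"product": "bread", "price": 1.49, "supermarket": "Store A", "distance": 1.2},
        {"product": "bread", "price": 1.29, "supermarket": "Store B", "distance": 0.4},
    ]
)


def rank_supermarkets(offers: pd.DataFrame, distance_weight: float = 0.5) -> pd.DataFrame:
    """Total the basket per supermarket and rank by a simple price/distance score.

    distance_weight is a hypothetical knob: the cost in euros that the
    user assigns to each kilometre of distance.
    """
    totals = (
        offers.groupby("supermarket")
        .agg(total_price=("price", "sum"), distance=("distance", "first"))
        .reset_index()
    )
    # Lower score is better: basket total plus a penalty for distance.
    totals["score"] = totals["total_price"] + distance_weight * totals["distance"]
    return totals.sort_values("score").reset_index(drop=True)


ranking = rank_supermarkets(offers)
print(ranking)
```

A linear score is just one way to express the compromise; sorting by price first and using distance as a tie-breaker would be an equally reasonable choice.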

Finally, the platform will sort the list by overall cost and distance and run another evaluation to find the best compromise between these two factors. This final output will be presented to the user, allowing them to decide on the most cost-effective and convenient supermarket for their grocery needs.

Our project encountered several challenges and deviations from this initial plan, which is common in such endeavors. Our research showed that only a few supermarkets have online stores or comprehensive online offerings. Many others primarily display their current discounts or non-edible products, making it difficult for us to gather the necessary grocery data.

For instance, while attempting to work with REWE's website, we ran into legal concerns around its CAPTCHA, a tool designed to distinguish human users from bots. This hindered our data collection efforts on their website.

Additionally, most websites did not provide the option to search for a specific region's selection, which can vary from one location to another. Using Google Maps for location-based searches would have complicated the project significantly and was beyond its scope.

Another challenge was the complexity and messiness of some data sources, as the HTML varied widely in structure and organization from site to site.

To address these roadblocks, we decided to step back and simplify our approach. We created an individual script for each supermarket, using Python with Selenium and a Chrome driver. This allowed us to extract prices from each supermarket's website.

Our approach involved creating a function called "choose a product," where the user selects a product from a list and its name is stored as a variable. Then, on each supermarket's website, we used "driver.find_element_by_id("####").click()" to accept cookies. Subsequently, a function named "enter_website" was developed to input the selected product into the website's search field. We also included steps to apply the "price low to high" filter from the drop-down list.
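A rough sketch of this flow is given here, not the project's actual script. Note that the `find_element_by_id` helper quoted above has been removed in recent Selenium 4 releases; the equivalent call is `driver.find_element(By.ID, ...)`. In this sketch the product list, the URL, the element IDs, and the helper names `open_site` and `search_in_chrome` are placeholders we introduce for illustration, and the "price low to high" step is left out because it depends entirely on each site's markup.

```python
# Locator strategy string; identical to selenium.webdriver.common.by.By.ID.
BY_ID = "id"

# Hypothetical product list; the real list is not given in the article.
PRODUCTS = ["milk", "bread", "eggs"]


def choose_product(index: int) -> str:
    """Return the product the user selected from the list."""
    return PRODUCTS[index]


def open_site(driver, url: str, cookie_button_id: str) -> None:
    """Load the shop and click its cookie-consent button."""
    driver.get(url)
    # Selenium 4 style; replaces driver.find_element_by_id(...).
    driver.find_element(BY_ID, cookie_button_id).click()


def enter_website(driver, search_field_id: str, product: str) -> None:
    """Type the product into the search field and submit the search."""
    field = driver.find_element(BY_ID, search_field_id)
    field.send_keys(product)
    field.submit()  # assumes the search field sits inside a <form>


def search_in_chrome(url: str, cookie_button_id: str,
                     search_field_id: str, product: str) -> str:
    """Run the flow in a real Chrome session and return the result page HTML."""
    from selenium import webdriver  # imported lazily; needs selenium and Chrome

    driver = webdriver.Chrome()
    try:
        open_site(driver, url, cookie_button_id)
        enter_website(driver, search_field_id, product)
        return driver.page_source
    finally:
        driver.quit()
```

On real sites the cookie banner and search field often appear with a delay, so an explicit wait before each `find_element` call would likely be needed.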

To extract the necessary information, we used XPath to locate the name and price of the first product displayed on the results page. We then stored this data, along with the name of the associated store, in a CSV file.
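The extraction and storage step could look something like the sketch below. The XPath expressions, the column names, and the helpers `first_result`, `parse_price`, and `append_row` are assumptions for illustration; the article does not show the real ones. The `driver` argument is expected to be a Selenium WebDriver that has already loaded a results page.

```python
import csv
from pathlib import Path

# Locator strategy string; identical to selenium.webdriver.common.by.By.XPATH.
BY_XPATH = "xpath"

FIELDNAMES = ["product", "price", "supermarket"]


def parse_price(text: str) -> float:
    """Turn a price string such as '1,29 €' into 1.29.

    Assumes a single decimal comma or point and no thousands separator.
    """
    cleaned = text.replace("€", "").strip().replace(",", ".")
    return float(cleaned)


def first_result(driver, name_xpath: str, price_xpath: str, store: str) -> dict:
    """Read the name and price of the first displayed product."""
    name = driver.find_element(BY_XPATH, name_xpath).text
    price_text = driver.find_element(BY_XPATH, price_xpath).text
    return {"product": name.strip(), "price": parse_price(price_text), "supermarket": store}


def append_row(csv_path: str, row: dict) -> None:
    """Append one row to the CSV file, writing the header if the file is new."""
    path = Path(csv_path)
    is_new = not path.exists()
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDNAMES)
        if is_new:
            writer.writeheader()
        writer.writerow(row)
```

Appending to one shared file keeps each supermarket script independent while still producing a single table for the later comparison.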

To streamline the process and avoid handling each supermarket's script separately, we added code that joins the scrapers and stores their results in the same CSV file.

Despite these challenges, we adapted our approach and created a functional system that scrapes supermarket prices for a chosen product, making grocery shopping more cost-effective and accessible for users.

To streamline the data collection process, we developed separate functions for each supermarket scraper and incorporated them within a for-loop to iterate through all of them. The code is functional, except for some minor issues related to identifying specific items on certain websites. These issues are quickly addressable with a little more time and attention.
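The loop over per-supermarket functions might be structured as in the sketch below. The two scraper functions are stand-ins that return fixed data or fail on purpose, and the error handling is our own suggestion for coping with the item-identification issues mentioned above; the article does not say how the original code handles them.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]
Scraper = Callable[[str], Row]


def scrape_store_a(product: str) -> Row:
    """Placeholder scraper; a real one would drive Selenium here."""
    return {"product": product, "price": 1.29, "supermarket": "Store A"}


def scrape_store_b(product: str) -> Row:
    """Placeholder scraper that simulates a page where the item is not found."""
    raise LookupError(f"no search result for {product!r}")


SCRAPERS: List[Scraper] = [scrape_store_a, scrape_store_b]


def scrape_all(product: str, scrapers: List[Scraper] = SCRAPERS) -> Tuple[List[Row], List[str]]:
    """Run every scraper for one product and collect rows plus failures."""
    rows: List[Row] = []
    failed: List[str] = []
    for scraper in scrapers:
        try:
            rows.append(scraper(product))
        except Exception:
            # Broad on purpose: one broken site should not stop the others.
            failed.append(scraper.__name__)
    return rows, failed


rows, failed = scrape_all("milk")
print(rows)
print("failed:", failed)
```

Recording which scrapers failed makes it easier to see which websites need their selectors fixed.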

Now that we have gathered all the required information, our next step is to determine the cheapest supermarket and the lowest total price for all the items on the shopping list. To achieve this, we wrote another script that filters the data in the newly created CSV file.

The script includes a method called "min_sum()" that groups the items in the data frame by supermarket, allowing us to perform the necessary calculations efficiently. Additionally, we implemented the "favorite_store()" method, which prompts the user to specify whether they have a preferred supermarket. If they do, they must choose from the list of scraped stores.

By combining these functionalities, we can present the final output to the user: the most cost-effective supermarket for their shopping list and the overall lowest price for all the items. With this final step, the platform can help users find the best deals and make budget-conscious decisions for their grocery shopping.

If the user names a favorite retailer, the system provides the total price of the shopping list at that store and displays the list of products they intend to purchase there. Conversely, if the user indicates they do not have a favorite store, the code executes the "min_sum()" method.

The "min_sum()" method calculates the price sum, store name, and product list, which are then presented to the user.
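One way the two methods could work is sketched below with Pandas. The function names come from the article, but the signatures, return values, and sample data are our assumptions. In particular, the interactive prompt is left out here: `favorite_store` simply receives the chosen store name as an argument.

```python
import pandas as pd

# Made-up sample of the combined CSV contents.
prices = pd.DataFrame(
    {
        "product": ["milk", "bread", "milk", "bread"],
        "price": [0.99, 1.49, 1.09, 1.29],
        "supermarket": ["Store A", "Store A", "Store B", "Store B"],
    }
)


def min_sum(prices: pd.DataFrame):
    """Return (store, total, products) for the cheapest store overall."""
    totals = prices.groupby("supermarket")["price"].sum()
    store = totals.idxmin()  # label of the smallest basket total
    products = prices.loc[prices["supermarket"] == store, "product"].tolist()
    return store, round(float(totals[store]), 2), products


def favorite_store(prices: pd.DataFrame, store: str):
    """Return (store, total, products) for a store picked by the user."""
    if store not in set(prices["supermarket"]):
        raise ValueError(f"{store!r} is not one of the scraped stores")
    chosen = prices[prices["supermarket"] == store]
    return store, round(float(chosen["price"].sum()), 2), chosen["product"].tolist()


print(min_sum(prices))
print(favorite_store(prices, "Store A"))
```

Returning the same three values from both functions means the caller can print the result the same way regardless of which branch was taken.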

However, a notable challenge we currently face is that not all stores list all of their items online. To address this, we have implemented a workaround: when an item is unavailable, we substitute it with the value "Beispiel" (German for "example") and assign it a standard price of 3€. This allows the system to continue functioning despite incomplete data.
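The workaround could be expressed as in the following sketch. The placeholder name and price come from the text above; the row layout, the `item` key (the entry from the shopping list that a row answers), and the function name `fill_missing` are assumptions made for this example.

```python
from typing import Dict, List

PLACEHOLDER_NAME = "Beispiel"
PLACEHOLDER_PRICE = 3.0  # euros, the standard price mentioned above


def fill_missing(rows: List[Dict], shopping_list: List[str], stores: List[str]) -> List[Dict]:
    """Return rows plus a placeholder for every (store, item) pair without a price."""
    found = {(row["supermarket"], row["item"]) for row in rows}
    filled = list(rows)  # copy so the caller's list is not modified
    for store in stores:
        for item in shopping_list:
            if (store, item) not in found:
                filled.append(
                    {
                        "item": item,
                        "product": PLACEHOLDER_NAME,
                        "price": PLACEHOLDER_PRICE,
                        "supermarket": store,
                    }
                )
    return filled


scraped = [{"item": "milk", "product": "Vollmilch 1L", "price": 0.99, "supermarket": "Store A"}]
complete = fill_missing(scraped, ["milk", "bread"], ["Store A", "Store B"])
print(len(complete))
```

Because placeholder rows are recognisable by their product name, they can be filtered out or flagged before totals are shown to the user.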

Nevertheless, we recognize this is an aspect we must address before making the web scraper available online. Ensuring comprehensive and accurate data representation will provide users with the most reliable and valuable information during their grocery shopping experience.

For further details, contact Food Data Scrape now! You can also reach us for all your Food Data Aggregator and Mobile Restaurant App Scraping service requirements.

Know More: https://www.fooddatascrape.com/web-scraping-cheapest-groceries-supermarket-maximize-your-savings.php