How to Extract Data from Amazon and Flipkart Mobile Apps?

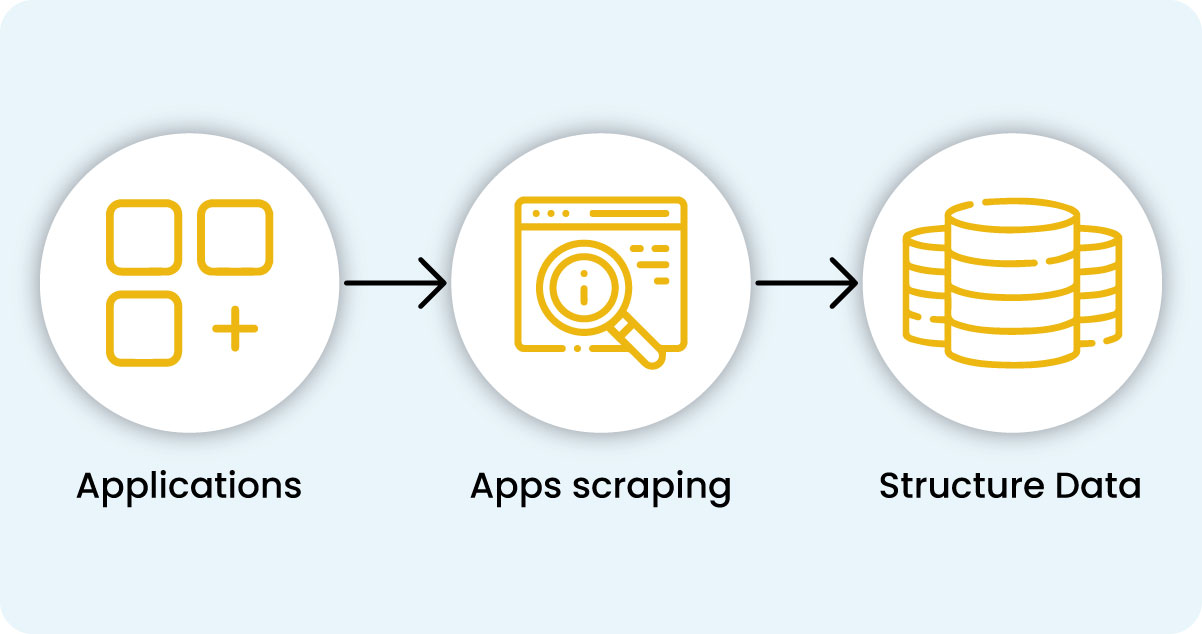

Imagine you need to retrieve a large volume of data from applications without manually visiting each page. The solution is "App Scraping," which automates this collection and makes it significantly faster.

In this blog post about App Scraping with Python, we briefly introduce App Scraping and demonstrate how to extract data from an application, making the entire process more efficient and straightforward.

Why App Scraping?

App Scraping is a valuable tool for gathering large amounts of data from applications. But why collect so much information in the first place? To understand its significance, let's look at some common uses of App Scraping:

Price Comparison: Services like Mobile App Scraping use App Scraping to collect data from online shopping applications so that product prices can be compared across platforms.

Email Address Collection: Many email marketing companies use App Scraping to compile email addresses for bulk email campaigns.

Social Media Insights: App Scraping is used to extract data from social media platforms such as Twitter, helping to identify trending topics and user behavior.

Research and Development: App Scraping enables the collection of large datasets, including statistics, general information, and weather data, from applications. This data is then analyzed and used for surveys and research and development projects.

Job Listings: App Scraping aggregates job openings and interview details from various applications into one easily accessible place for users.

Across all these domains, App Scraping plays a pivotal role by efficiently extracting valuable data from applications, supporting informed decision-making and streamlined processes.

What Is App Scraping? Is It Legal?

App Scraping is an automated technique for extracting large amounts of data from applications. This data is typically unstructured; App Scraping collects it and organizes it into a structured format. Applications can be scraped using online services, APIs, or custom code. In this article, we implement App Scraping in Python.
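To make "unstructured to structured" concrete, here is a minimal sketch that uses only Python's standard-library `html.parser`. The HTML snippet and its `product`, `name`, and `price` class names are hypothetical; real app and site markup differs and changes often.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Turn a listing page into a list of {name, price} dicts.

    Assumes (hypothetically) that each product is a <div class="product">
    containing <span class="name"> and <span class="price"> elements.
    """

    def __init__(self):
        super().__init__()
        self.rows = []       # structured output: one dict per product
        self._field = None   # field that the next text node belongs to

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class", "")
        if tag == "div" and cls == "product":
            self.rows.append({"name": None, "price": None})
        elif tag == "span" and cls in ("name", "price") and self.rows:
            self._field = cls

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field and data.strip():
            value = data.strip()
            if self._field == "price":
                # Standardize "₹1,299" to the number 1299.0
                value = float(value.lstrip("₹").replace(",", ""))
            self.rows[-1][self._field] = value


html = """
<div class="product"><span class="name">Green Tea 100g</span><span class="price">₹249</span></div>
<div class="product"><span class="name">Filter Coffee 500g</span><span class="price">₹1,299</span></div>
"""
parser = ProductParser()
parser.feed(html)
print(parser.rows)
```

Production scrapers usually rely on CSS or XPath selectors (as the Scrapy code in this article does) rather than a hand-written parser, but the idea is the same: locate elements, pull out their text, and normalize it into fields.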

Whether App Scraping is permitted varies: some applications allow it and others do not. A first check is the site's "robots.txt" file, which you can find by appending "/robots.txt" to the site's root URL. For instance, if you are scraping Flipkart, the file is at www.flipkart.com/robots.txt. Keep in mind that robots.txt only states which paths the operator asks automated crawlers to avoid; it is not a legal ruling, so the site's terms of service and applicable law also matter.
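Python's standard library can evaluate robots.txt rules for you. This sketch parses a hypothetical robots.txt (the rules and the `my-research-bot` user agent are made up for illustration) with `urllib.robotparser`; against a live site you would call `set_url()` and `read()` instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used here so the example runs offline.
ROBOTS_TXT = """
User-agent: *
Disallow: /checkout/
Disallow: /account/
Allow: /
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

for url in ("https://www.example.com/search?q=tea", "https://www.example.com/checkout/cart"):
    verdict = "allowed" if rp.can_fetch("my-research-bot", url) else "disallowed"
    print(url, "->", verdict)
```

Rules are matched in order, so the `/checkout/` path is disallowed while the search page falls through to `Allow: /`.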

Why Choose Python For App Scraping?

Python is undoubtedly renowned for its versatility, but what makes it a preferred choice for App Scraping over other languages? Let's explore the critical features of Python that make it exceptionally well-suited for App Scraping:

Ease of Use: Python's syntax is simple. It does not need semicolons ";" or curly braces "{}" to delimit statements and blocks, which keeps code clean, uncluttered, and easy to work with.

Rich Library Ecosystem: Python has an extensive collection of libraries, including NumPy, Matplotlib, and Pandas, plus scraping frameworks such as Scrapy (used in the code later in this article). These provide ready-made functions and tools that make Python well suited to App Scraping and data manipulation.

Dynamic Typing: Python is dynamically typed, so you do not need to declare variable types explicitly. This flexibility saves time and speeds up coding.

Readable Syntax: Python's syntax is highly legible; reading Python code feels close to reading plain English. Its clarity and its indentation rules make it easy to tell code blocks and scopes apart.

Concise Code for Complex Tasks: App Scraping is meant to save time, and Python helps by letting you accomplish substantial tasks with little code, which shortens development.

Vibrant Community: Python boasts one of the largest and most active developer communities. This vast community ensures that assistance is readily available when encountering coding challenges. You can seek help, share knowledge, and collaborate with fellow Python enthusiasts.

Python's simplicity, robust libraries, dynamic typing, readability, code conciseness, and strong community support collectively position it as a superior choice for App Scraping and various other programming tasks.

Our e-commerce App Scraping service caters to diverse businesses, from newcomers to well-established online retailers, each with unique requirements and data dependencies.

By scraping e-commerce applications, we let you extract data feeds from multiple e-commerce reference sites, partners, and channels at the same time. We simplify the regular collection of product data in your preferred format, so you have the information you need.

(A) Data Collection

At Mobile App Scraping, our data collection covers diverse platforms, each holding valuable information. Among them, we gather data from some of the most prominent names in e-commerce, including Amazon, Flipkart, BigBasket, and DMart.

Renowned as the global e-commerce juggernaut, Amazon offers an expansive array of products across countless categories. Our data collection efforts on Amazon provide invaluable insights into product details, pricing strategies, and customer sentiments. This information serves as a cornerstone for competitive analysis and strategic decision-making.

Another e-commerce giant, Flipkart, provides a wealth of data regarding product availability, pricing dynamics, and consumer preferences. Access to Flipkart's data lets us discern market trends and evolving consumer behaviors, enabling us to guide our clients effectively.

BigBasket and DMart, which specialize in groceries and daily essentials, provide data on food products, price fluctuations, and consumer preferences. This data is especially valuable for businesses seeking to strengthen their presence in the grocery segment.

By adeptly collecting data from these e-commerce platforms, Mobile App Scraping equips businesses with the insights needed to thrive in the digital marketplace. Our commitment to robust data collection enhances decision-making processes, enabling our clients to navigate the competitive landscape with confidence and precision.

The Scrapy script below defines one spider per platform (Amazon, BigBasket, DMart, and Flipkart) and runs them all from a single CrawlerProcess:

```python
import json
import logging
import os

import pandas as pd
import scrapy
from scrapy.crawler import CrawlerProcess
from scrapy.spidermiddlewares.httperror import HttpError

# The CONST_* names are used but not defined in the original listing;
# these values are assumptions that make the script self-contained.
CONST_DF_FORMAT_CSV = '.csv'
CONST_DF_FORMAT_JSON = '.json'
CONST_PLATFORM, CONST_PRODUCT, CONST_URL, CONST_MAX_PAGE_COUNT = 'platform', 'product', 'url', 'max_page_count'
CONST_PLATFORM_AMAZON, CONST_PLATFORM_BIGBASKET = 'amazon', 'bigbasket'
CONST_PLATFORM_DMART, CONST_PLATFORM_FLIPKART = 'dmart', 'flipkart'
CONST_PRODUCT_BEVERAGES = 'beverages'
CONST_PRODUCT_BEVERAGES_TEA = 'tea'
CONST_PRODUCT_BEVERAGES_COFFEE = 'coffee'
CONST_PRODUCT_GROCERRIES = 'groceries'
CONST_PRODUCT_MASALA_AND_SPICES = 'masala_and_spices'

DF_Save_Format = CONST_DF_FORMAT_CSV
OUTPUT_FORMAT = DF_Save_Format.lstrip('.')  # 'csv' or 'json' for Scrapy feed exports


class Base_Spider:
    '''Mixin shared by all the custom data scrapers.'''

    custom_settings = {'LOG_LEVEL': logging.WARNING}

    def save(self, df, filename, domain):
        '''Write a DataFrame to outputs/<domain>_<filename>.csv (or .json).'''
        os.makedirs('outputs', exist_ok=True)
        target = os.path.join('outputs', domain + '_' + filename + DF_Save_Format)
        if DF_Save_Format == CONST_DF_FORMAT_CSV:
            df.to_csv(target)
        elif DF_Save_Format == CONST_DF_FORMAT_JSON:
            df.to_json(target)


class Amazon_Spider(scrapy.Spider, Base_Spider):
    '''Spider to crawl best-seller data from Amazon India.'''
    name = 'amazon'
    allowed_domains = ['amazon.in']
    custom_settings = {
        **Base_Spider.custom_settings,
        # FEEDS replaces the deprecated FEED_URI / FEED_FORMAT settings.
        'FEEDS': {'outputs/amazonresult' + DF_Save_Format: {'format': OUTPUT_FORMAT}},
    }

    def __init__(self, product=None, url=None, max_page_count=None, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.product = product
        self.start_urls = [url]

    def parse(self, response):
        # Follow each sub-category link in the best-seller navigation tree;
        # response.follow also resolves relative hrefs.
        for href in response.xpath('//ul[@id="zg_browseRoot"]/ul/ul/li/a/@href').getall():
            yield response.follow(href, callback=self.parse_product,
                                  errback=self.errback_httpbin, dont_filter=True)

    def parse_product(self, response):
        category = response.xpath('//span[@class="category"]/text()').get()
        # XPaths relative to each product node, so every product is yielded once.
        for product in response.xpath('//ol[@id="zg-ordered-list"]//li[@class="zg-item-immersion"]'):
            yield {
                'product_name': product.xpath('.//div[@class="a-section a-spacing-small"]/img/@alt').get(),
                'price': product.xpath('.//span[@class="p13n-sc-price"]/text()').get(),
                'rating': product.xpath('.//div[@class="a-icon-row a-spacing-none"]/a/@title').get(),
                'category': category,
            }

    def errback_httpbin(self, failure):
        # Log non-2xx responses reported by the HttpError spider middleware.
        if failure.check(HttpError):
            self.logger.error('HttpError on %s', failure.value.response.url)


class BigBasket_Spider(scrapy.Spider, Base_Spider):
    '''Spider to crawl data from the BigBasket product-listing API.'''
    name = 'bigbasket'
    allowed_domains = ['bigbasket.com']
    custom_settings = {'LOG_LEVEL': logging.WARNING}

    def __init__(self, product=None, url=None, max_page_count=None, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.product = product
        self.start_urls = []
        if self.product in (CONST_PRODUCT_BEVERAGES_TEA, CONST_PRODUCT_BEVERAGES_COFFEE):
            # Replace the single digit after 'page=' for pages 1..max_page_count.
            idx = url.rfind('page=') + 5
            for i in range(1, int(max_page_count) + 1):
                self.start_urls.append(url[:idx] + str(i) + url[idx + 1:])

    def get_json(self, response):
        if self.product in (CONST_PRODUCT_BEVERAGES_TEA, CONST_PRODUCT_BEVERAGES_COFFEE):
            return json.loads(response.body)['tab_info']['product_map']['all']['prods']
        return []

    def parse(self, response):
        url = str(response.url)
        cols = ['p_desc', 'mrp', 'sp', 'dis_val', 'w', 'store_availability', 'rating_info']
        rows = []
        for item in self.get_json(response):
            row = {k: item.get(k) for k in cols}
            # True if any store entry has pstat 'A' (assumed to mean "available").
            row['store_availability'] = 'A' in [s['pstat'] for s in item.get('store_availability') or []]
            row['rating_info'] = (item.get('rating_info') or {}).get('avg_rating')
            rows.append(row)
        # DataFrame.append was removed in pandas 2.0, so build the frame in one go.
        df = pd.DataFrame(rows, columns=cols)
        df[['dis_val', 'mrp', 'sp']] = df[['dis_val', 'mrp', 'sp']].apply(pd.to_numeric)
        # Convert the percentage discount into an absolute amount.
        df['dis_val'] = (df['mrp'] / 100) * df['dis_val'].fillna(0)
        page = url[url.rfind('page=') + 5:url.rfind('&tab_type')]
        self.save(df, self.product + '_' + page, BigBasket_Spider.name)


class Dmart_Spider(scrapy.Spider, Base_Spider):
    '''Spider to crawl data from the DMart API.'''
    name = 'dmart'
    allowed_domains = ['dmart.in']
    custom_settings = {'LOG_LEVEL': logging.WARNING}

    def __init__(self, product=None, url=None, max_page_count=None, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.product = product
        self.start_urls = []
        if self.product in (CONST_PRODUCT_BEVERAGES, CONST_PRODUCT_GROCERRIES, CONST_PRODUCT_MASALA_AND_SPICES):
            self.start_urls.append(url)

    def get_json(self, response):
        data = json.loads(response.body)
        if self.product in (CONST_PRODUCT_BEVERAGES, CONST_PRODUCT_MASALA_AND_SPICES):
            return data['products']['suggestionView']
        if self.product == CONST_PRODUCT_GROCERRIES:
            return data['suggestionView']
        return []

    def parse(self, response):
        cols = ['name', 'price_MRP', 'price_SALE', 'save_price', 'defining', 'buyable']
        # Each product's first SKU carries its name and price fields.
        rows = [{k: item['skus'][0].get(k) for k in cols} for item in self.get_json(response)]
        self.save(pd.DataFrame(rows, columns=cols), self.product, Dmart_Spider.name)


class Flipkart_Spider(scrapy.Spider, Base_Spider):
    '''Spider to crawl data from Flipkart search results.'''
    name = 'flipkart'
    allowed_domains = ['flipkart.com']  # must match the start URLs' domain
    custom_settings = {
        **Base_Spider.custom_settings,
        'FEEDS': {'outputs/flipkartresult' + DF_Save_Format: {'format': OUTPUT_FORMAT}},
    }

    def __init__(self, product=None, url=None, max_page_count=None, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.product = product
        self.start_urls = [
            'https://www.flipkart.com/search?q=beverages%20in%20tea&otracker=search&otracker1=search&marketplace=FLIPKART&as-show=on&as=off',
            'https://www.flipkart.com/search?q=tea&otracker=search&otracker1=search&marketplace=FLIPKART&as-show=on&as=off&page=2',
            'https://www.flipkart.com/search?q=tea&otracker=search&otracker1=search&marketplace=FLIPKART&as-show=on&as=off&page=3',
            'https://www.flipkart.com/search?q=coffee&otracker=search&otracker1=search&marketplace=FLIPKART&as-show=on&as=off',
            'https://www.flipkart.com/search?q=coffee&otracker=search&otracker1=search&marketplace=FLIPKART&as-show=on&as=off&page=2',
            'https://www.flipkart.com/search?q=coffee&otracker=search&otracker1=search&marketplace=FLIPKART&as-show=on&as=off&page=3',
            'https://www.flipkart.com/search?q=spices&as=on&as-show=on&otracker=AS_Query_OrganicAutoSuggest_4_4_na_na_na&otracker1=AS_Query_OrganicAutoSuggest_4_4_na_na_na&as-pos=4&as-type=RECENT&suggestionId=spices&requestId=94f8bdaf-8357-487a-a68b-e909565784e7&as-searchtext=s%5Bpic',
            'https://www.flipkart.com/search?q=spices&as=on&as-show=on&otracker=AS_Query_OrganicAutoSuggest_4_4_na_na_na&otracker1=AS_Query_OrganicAutoSuggest_4_4_na_na_na&as-pos=4&as-type=RECENT&suggestionId=spices&requestId=94f8bdaf-8357-487a-a68b-e909565784e7&as-searchtext=s%5Bpic&page=2',
            'https://www.flipkart.com/search?q=spices&as=on&as-show=on&otracker=AS_Query_OrganicAutoSuggest_4_4_na_na_na&otracker1=AS_Query_OrganicAutoSuggest_4_4_na_na_na&as-pos=4&as-type=RECENT&suggestionId=spices&requestId=94f8bdaf-8357-487a-a68b-e909565784e7&as-searchtext=s%5Bpic&page=3',
            'https://www.flipkart.com/search?q=grocery&sid=7jv%2Cp3n%2C0ed&as=on&as-show=on&otracker=AS_QueryStore_OrganicAutoSuggest_2_8_na_na_ps&otracker1=AS_QueryStore_OrganicAutoSuggest_2_8_na_na_ps&as-pos=2&as-type=RECENT&suggestionId=grocery%7CDals+%26+Pulses&requestId=749df6fb-1916-45df-a5f6-e2445a67b7ce&as-searchtext=grocery%20',
            'https://www.flipkart.com/search?q=grocery&sid=7jv%2Cp3n%2C0ed&as=on&as-show=on&otracker=AS_QueryStore_OrganicAutoSuggest_2_8_na_na_ps&otracker1=AS_QueryStore_OrganicAutoSuggest_2_8_na_na_ps&as-pos=2&as-type=RECENT&suggestionId=grocery%7CDals+%26+Pulses&requestId=749df6fb-1916-45df-a5f6-e2445a67b7ce&as-searchtext=grocery+&page=2',
            'https://www.flipkart.com/search?q=grocery+1+rs+offer+all&sid=7jv%2C72u%2Cd6s&as=on&as-show=on&otracker=AS_QueryStore_OrganicAutoSuggest_6_7_na_na_ps&otracker1=AS_QueryStore_OrganicAutoSuggest_6_7_na_na_ps&as-pos=6&as-type=RECENT&suggestionId=grocery+1+rs+offer+all%7CEdible+Oils&requestId=c19eb0d1-779c-4ae6-9b5a-3f156db29852&as-searchtext=grocery',
            'https://www.flipkart.com/search?q=grocery&sid=7jv%2C30b%2Cpne&as=on&as-show=on&otracker=AS_QueryStore_OrganicAutoSuggest_4_7_na_na_ps&otracker1=AS_QueryStore_OrganicAutoSuggest_4_7_na_na_ps&as-pos=4&as-type=RECENT&suggestionId=grocery%7CNuts+%26+Dry+Fruits&requestId=06270ce4-4dc6-4669-9a72-ce708331f98b&as-searchtext=grocery',
        ]

    def parse(self, response):
        # These obfuscated class names are likely to have changed since this was written.
        category = response.css('span._2yAnYN span::text').get()
        for p in response.css('div._3liAhj'):
            price = p.css('a._1Vfi6u div._1uv9Cb div._1vC4OE::text').get()
            yield {
                'Category': category,
                'itemname': p.css('a._2cLu-l::text').get(),
                'price(₹)': price[1:] if price else None,  # drop the leading ₹ sign
                'MRP(₹)': p.css('a._1Vfi6u div._1uv9Cb div._3auQ3N::text').getall(),
                'discount': p.css('a._1Vfi6u div._1uv9Cb div.VGWI6T span::text').get(),
                'rating': p.css('div.hGSR34::text').get(),
                'itemsdeliverable': p.css('span._1GJ2ZM::text').getall(),
            }


process = CrawlerProcess({'LOG_ENABLED': False})

products = [
    {CONST_PLATFORM: CONST_PLATFORM_BIGBASKET, CONST_PRODUCT: CONST_PRODUCT_BEVERAGES_TEA,
     CONST_URL: 'https://www.bigbasket.com/product/get-products/?slug=tea&page=1&tab_type=[%22all%22]&sorted_on=relevance&listtype=ps',
     CONST_MAX_PAGE_COUNT: '10'},
    {CONST_PLATFORM: CONST_PLATFORM_BIGBASKET, CONST_PRODUCT: CONST_PRODUCT_BEVERAGES_COFFEE,
     CONST_URL: 'https://www.bigbasket.com/product/get-products/?slug=coffee&page=1&tab_type=[%22all%22]&sorted_on=relevance&listtype=ps',
     CONST_MAX_PAGE_COUNT: '10'},
    {CONST_PLATFORM: CONST_PLATFORM_AMAZON, CONST_PRODUCT: CONST_PRODUCT_BEVERAGES_TEA,
     CONST_URL: 'https://www.amazon.in/gp/bestsellers/grocery/ref=zg_bs_nav_0', CONST_MAX_PAGE_COUNT: '1'},
    {CONST_PLATFORM: CONST_PLATFORM_DMART, CONST_PRODUCT: CONST_PRODUCT_BEVERAGES,
     CONST_URL: 'https://digital.dmart.in/api/v1/clp/15504?page=1&size=1000', CONST_MAX_PAGE_COUNT: '1'},
    {CONST_PLATFORM: CONST_PLATFORM_DMART, CONST_PRODUCT: CONST_PRODUCT_GROCERRIES,
     CONST_URL: 'https://digital.dmart.in/api/v1/search/grocery?page=1&size=1000', CONST_MAX_PAGE_COUNT: '1'},
    {CONST_PLATFORM: CONST_PLATFORM_DMART, CONST_PRODUCT: CONST_PRODUCT_MASALA_AND_SPICES,
     CONST_URL: 'https://digital.dmart.in/api/v1/clp/15578?page=1&size=1000', CONST_MAX_PAGE_COUNT: '1'},
    {CONST_PLATFORM: CONST_PLATFORM_FLIPKART, CONST_PRODUCT: None, CONST_URL: None, CONST_MAX_PAGE_COUNT: '1'},
]

spiders = {
    CONST_PLATFORM_DMART: Dmart_Spider,
    CONST_PLATFORM_AMAZON: Amazon_Spider,
    CONST_PLATFORM_BIGBASKET: BigBasket_Spider,
    CONST_PLATFORM_FLIPKART: Flipkart_Spider,
}
for item in products:
    # Positional arguments are forwarded to __init__(product, url, max_page_count).
    process.crawl(spiders[item[CONST_PLATFORM]], item[CONST_PRODUCT], item[CONST_URL], item[CONST_MAX_PAGE_COUNT])

process.start()  # blocks until every spider has finished
```

(B) Data Cleaning

Data cleaning is a crucial step in our data processing pipeline at Mobile App Scraping, especially for data from e-commerce giants like Amazon and Flipkart. Here's how we ensure clean and reliable data for our clients:

Removing Duplicates: Duplicate records can skew analysis and lead to inaccuracies. We employ deduplication techniques to eliminate redundant entries, ensuring each data point is unique.

Handling Missing Values: Incomplete data can mislead decision-making. We handle missing values by imputing them from relevant information or flagging them for further investigation.

Standardizing Formats: Product names, categories, and other attributes often vary in format. We standardize these formats to ensure consistency across the dataset, making it easier to analyze.

Data Validation: We validate the data against predefined rules and criteria to identify inconsistencies or anomalies. Any data that doesn't conform to these standards is flagged for review.

Dealing with Outliers: Outliers can significantly impact statistical analysis. We employ various statistical methods to detect and manage outliers appropriately.

Text Preprocessing: Reviews and descriptions often contain unstructured text. To extract meaningful insights, we preprocess this text by removing stopwords, punctuation, and special characters.

Normalization: Numerical data may require scaling or normalization to be within a consistent range, ensuring fair comparisons between different attributes.

Data Integrity: We ensure that the data from Amazon and Flipkart aligns with the intended schema and structure, rectifying discrepancies.

Quality Assurance: Rigorous quality checks are conducted at every stage of the cleaning process to maintain data integrity and reliability.

By meticulously cleaning and preparing data from Amazon and Flipkart, Mobile App Scraping ensures that our clients receive high-quality, trustworthy data for their analysis, strategic planning, and decision-making needs.
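The cleaning steps above can be sketched with the standard library alone. The sample rows, field names, stopword list, and validation rules below are all hypothetical; in pandas the same steps map roughly onto `drop_duplicates`, `fillna`, and `quantile`.

```python
import re
import statistics
import string

# Hypothetical raw rows as a spider might yield them; field names are illustrative.
RAW = [
    {"name": "  Green Tea 100g ", "platform": "Amazon", "price": "₹249", "rating": "4.2 out of 5 stars"},
    {"name": "Green Tea 100g", "platform": "amazon", "price": "₹249", "rating": "4.2 out of 5 stars"},
    {"name": "Filter Coffee 500g", "platform": "Flipkart", "price": "₹1,299", "rating": None},
    {"name": "Masala Chai 250g", "platform": "Flipkart", "price": "₹310", "rating": "3.9"},
]

# Tiny illustrative stopword list; real pipelines use a fuller one.
STOPWORDS = {"a", "an", "and", "the", "is", "it", "of", "to", "this"}


def to_number(text):
    """Standardize strings such as '₹1,299' or '4.2 out of 5 stars' to a float."""
    if text is None:
        return None
    match = re.search(r"\d+(?:\.\d+)?", text.replace(",", ""))
    return float(match.group()) if match else None


def clean(rows):
    """Standardize formats, drop duplicates, impute missing ratings, validate."""
    seen, out = set(), []
    for row in rows:
        rec = {
            "name": " ".join(row["name"].split()),  # collapse stray whitespace
            "platform": row["platform"].lower(),     # consistent casing
            "price": to_number(row["price"]),
            "rating": to_number(row["rating"]),
        }
        key = (rec["name"].lower(), rec["platform"])
        if key in seen:  # duplicate of a record already kept
            continue
        seen.add(key)
        out.append(rec)

    # Impute missing ratings with the median of known ratings, and flag them.
    fill = statistics.median([r["rating"] for r in out if r["rating"] is not None])
    for rec in out:
        rec["rating_imputed"] = rec["rating"] is None
        if rec["rating_imputed"]:
            rec["rating"] = fill

    # Assumed validation rules: positive price and a rating between 0 and 5.
    return [r for r in out if r["price"] is not None and r["price"] > 0 and 0 <= r["rating"] <= 5]


def flag_price_outliers(rows, k=1.5):
    """Mark prices outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]; needs >= 2 rows."""
    q1, _, q3 = statistics.quantiles([r["price"] for r in rows], n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    for r in rows:
        r["price_outlier"] = not (low <= r["price"] <= high)
    return rows


def preprocess_text(text):
    """Lowercase, strip punctuation, and drop stopwords from review text."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return [w for w in text.split() if w not in STOPWORDS]


cleaned = flag_price_outliers(clean(RAW))
for rec in cleaned:
    print(rec)
print(preprocess_text("Great taste, and the packaging is good!"))
```

The two "Green Tea" rows collapse into one after whitespace and casing are normalized, and the missing coffee rating is filled with the median but keeps `rating_imputed=True`, so it can still be reviewed rather than silently trusted.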

(C) Data Integration

At Mobile App Scraping, we understand the importance of data integration when scraping e-commerce data from Amazon and Flipkart. Our data integration process involves several key steps to ensure that the collected data from these platforms seamlessly comes together for meaningful analysis and decision-making:

Data Collection: We initiate the process by scraping data from Amazon and Flipkart, gathering a wide range of information, including product details, pricing, reviews, and more.

Data Transformation: The data from these platforms often comes in diverse formats and structures. We transform the data into a unified format, ensuring consistency and compatibility.

Cleaning and Validation: Before integration, we rigorously clean the data to remove duplicates, handle missing values, standardize formats, and validate it against predefined rules. This step enhances data quality.

Normalization: Data normalization is applied to numerical attributes to bring them within a consistent range, facilitating fair comparisons and analysis.

Text Processing: Unstructured text data, such as product descriptions and customer reviews, undergoes text preprocessing to extract meaningful insights. This includes removing stopwords, special characters, and punctuation.

Data Alignment: We ensure that data from Amazon and Flipkart aligns with the intended schema and structure, rectifying any discrepancies that may arise during scraping.

Merge and Consolidate: The data from both sources is merged and consolidated into a single dataset or database, ensuring that it can be effectively analyzed together.

Quality Assurance: Rigorous quality checks are conducted at every stage of the integration process to maintain data integrity, reliability, and accuracy.

Data Accessibility: Once integrated, the data is made easily accessible to our clients in the format they require, whether it's for reporting, analytics, or further processing.

By meticulously integrating data from Amazon and Flipkart, Mobile App Scraping enables our clients to harness the combined power of these e-commerce platforms for informed decision-making, competitive analysis, and strategic planning. Our data integration process ensures that the data is not only comprehensive but also cohesive and ready for actionable insights.
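A minimal sketch of the merge-and-consolidate and normalization steps, assuming already-cleaned records from each platform and a deliberately naive matching rule (lowercase the name and drop whitespace); real catalogs typically need more robust product matching. The records are hypothetical, and in pandas the join would be `DataFrame.merge(..., how="outer")`.

```python
# Hypothetical cleaned records from each platform (prices in ₹).
amazon = [
    {"name": "Green Tea 100g", "price": 249.0},
    {"name": "Filter Coffee 500g", "price": 1350.0},
]
flipkart = [
    {"name": "green tea 100 g", "price": 235.0},
    {"name": "Filter Coffee 500g", "price": 1299.0},
    {"name": "Masala Chai 250g", "price": 310.0},
]


def product_key(name):
    """Assumed matching rule: lowercase the name and remove all whitespace."""
    return "".join(name.lower().split())


def integrate(left, right):
    """Outer-join the two platforms on product_key and compute the price gap."""
    merged = {}
    for source, rows in (("amazon", left), ("flipkart", right)):
        for row in rows:
            rec = merged.setdefault(product_key(row["name"]), {"name": row["name"]})
            rec[f"{source}_price"] = row["price"]
    for rec in merged.values():
        a, f = rec.get("amazon_price"), rec.get("flipkart_price")
        # Positive gap means the product is cheaper on Flipkart.
        rec["price_gap"] = a - f if a is not None and f is not None else None
    return merged


def min_max(values):
    """Scale values to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


combined = integrate(amazon, flipkart)
for key, rec in combined.items():
    print(key, rec)
print(min_max([rec["flipkart_price"] for rec in combined.values()]))
```

An outer join keeps products that appear on only one platform (here, the masala chai), with `price_gap` left as `None` instead of dropping the row.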

(D) Exploratory Data Analysis and Recommendation

Exploratory Data Analysis (EDA) is a critical phase in our data-driven approach for scraping and analyzing data from Amazon and Flipkart mobile apps. This process helps us gain deep insights, identify trends, and make data-driven recommendations for our clients at Mobile App Scraping.

Data Profiling: We begin by profiling the scraped data to understand its structure, size, and basic statistics. This helps us identify any immediate anomalies or data quality issues.

Data Visualization: Visualization plays a crucial role in EDA. We create various plots, charts, and graphs to explore the data visually. This includes histograms, scatter plots, and time series plots to uncover patterns and trends.

Descriptive Statistics: We calculate key descriptive statistics such as mean, median, mode, variance, and standard deviation to summarize the data's central tendencies and variability.

Data Distribution: Analyzing data distributions helps us understand the spread of values within each attribute. We use techniques like box plots and density plots to visualize these distributions.

Correlation Analysis: Identifying correlations between different attributes enables us to understand relationships within the data. Correlation matrices and heatmaps are used to visualize these connections.
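The descriptive-statistics and correlation steps can be sketched with Python's `statistics` module. The price and rating values are hypothetical, and `pearson` is written out by hand (Python 3.10+ also offers `statistics.correlation`) so the sketch runs on older interpreters too.

```python
import statistics

# Hypothetical integrated sample: price (₹) and average rating per product.
prices = [249.0, 1299.0, 310.0, 180.0, 560.0]
ratings = [4.2, 4.4, 3.9, 3.8, 4.1]


def describe(values):
    """Summary statistics, similar in spirit to pandas Series.describe()."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),        # sample standard deviation
        "variance": statistics.variance(values),  # sample variance
        "min": min(values),
        "max": max(values),
    }


def pearson(x, y):
    """Pearson correlation coefficient r between two equal-length samples."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


print(describe(prices))
print(round(pearson(prices, ratings), 3))
```

A correlation computed on a handful of products says little on its own; in practice it is computed per category over many rows and read alongside the plots described above.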

Recommendations:

Pricing Strategy: Based on EDA findings, we recommend optimal pricing strategies for our clients on both Amazon and Flipkart. This includes pricing adjustments to stay competitive and maximize revenue.

Product Recommendations: We utilize customer reviews and product data to recommend product improvements or new offerings based on customer preferences and market demand.

Market Trends: EDA helps us identify emerging market trends and consumer behaviors. We recommend strategies to capitalize on these trends and stay ahead of competitors.

Inventory Management: Efficient inventory management is critical in e-commerce. EDA insights guide inventory decisions, ensuring products are stocked based on demand patterns.

Customer Insights: Understanding customer behavior through EDA allows us to tailor marketing campaigns, improve customer experiences, and increase retention rates.

Competitive Analysis: EDA helps us analyze the competitive landscape of Amazon and Flipkart. We recommend strategies to outperform competitors and gain a more robust market foothold.

By conducting thorough EDA and providing data-driven recommendations, Mobile App Scraping empowers clients to make informed decisions, enhance their e-commerce presence, and drive business growth in online retail's dynamic and competitive world.

Conclusion

In conclusion, scraping Amazon and Flipkart mobile app data at Mobile App Scraping is a meticulous, data-driven endeavor. By combining App Scraping with data cleaning, integration, and exploratory data analysis, we unlock valuable insights from these e-commerce giants. This approach lets us provide actionable recommendations that help our clients make informed decisions, optimize pricing strategies, understand market trends, and stay competitive in the ever-evolving e-commerce landscape.

At Mobile App Scraping, we recognize that data is the key to success in the digital marketplace, and our comprehensive approach ensures that the data collected from Amazon and Flipkart is not just raw information but a strategic asset. Through rigorous data processing, we transform this information into a powerful tool that drives our clients' growth, efficiency, and profitability.

With our commitment to data integrity, quality, and actionable insights, Mobile App Scraping is a trusted partner in leveraging Amazon and Flipkart app data to navigate online retail's complexities successfully. For more details, contact Mobile App Scraping now!

Know More: https://www.mobileappscraping.com/extract-data-from-amazon-flipkart-apps.php